CI with Docker and TeamCity

September 18, 2015

Recently I’ve been working on setting up the build infrastructure for StorReduce. We have a diverse range of test, build and deployment scenarios: unit testing, integration testing, producing binaries and RPMs, producing virtual appliances for various infrastructures, and deploying our website. I expect this list to grow in the future.

I decided to use my go-to Continuous Integration (CI) solution, TeamCity. I’ve only ever had good experiences with it: it’s incredibly mature and keeps getting better with age, it’s reliable and highly configurable, and it Just Works™.

Given the diverse range of builds we want to push through TeamCity, it was desirable to compartmentalize the software on the build servers. It can quickly get out of hand having to ensure that a growing list of requirements (golang and tooling, hugo, aws cli, rpmbuild, test utils, debian packaging tools, ansible, python… etc.) is installed and configured correctly on each build server in a CI pipeline. That’s where Docker comes in.

Docker and CI are a match made in heaven. Using Docker, each build in the pipeline can be run in a tailor-made, isolated and consistent environment every single time. Each build server can be configured to have the bare minimum requirements installed (OS, build agent software and Docker) and the rest can be pulled in via a Docker image based on the build that is running. This makes the build infrastructure much easier to provision and maintain.

Unfortunately, Docker is not natively supported in TeamCity. However, there is a brilliant open source plugin called TeamCity.Virtual that adds a Docker target to TeamCity builds. To install the plugin, follow its simple installation instructions. You will also need to ensure Docker is installed on each of your build servers.

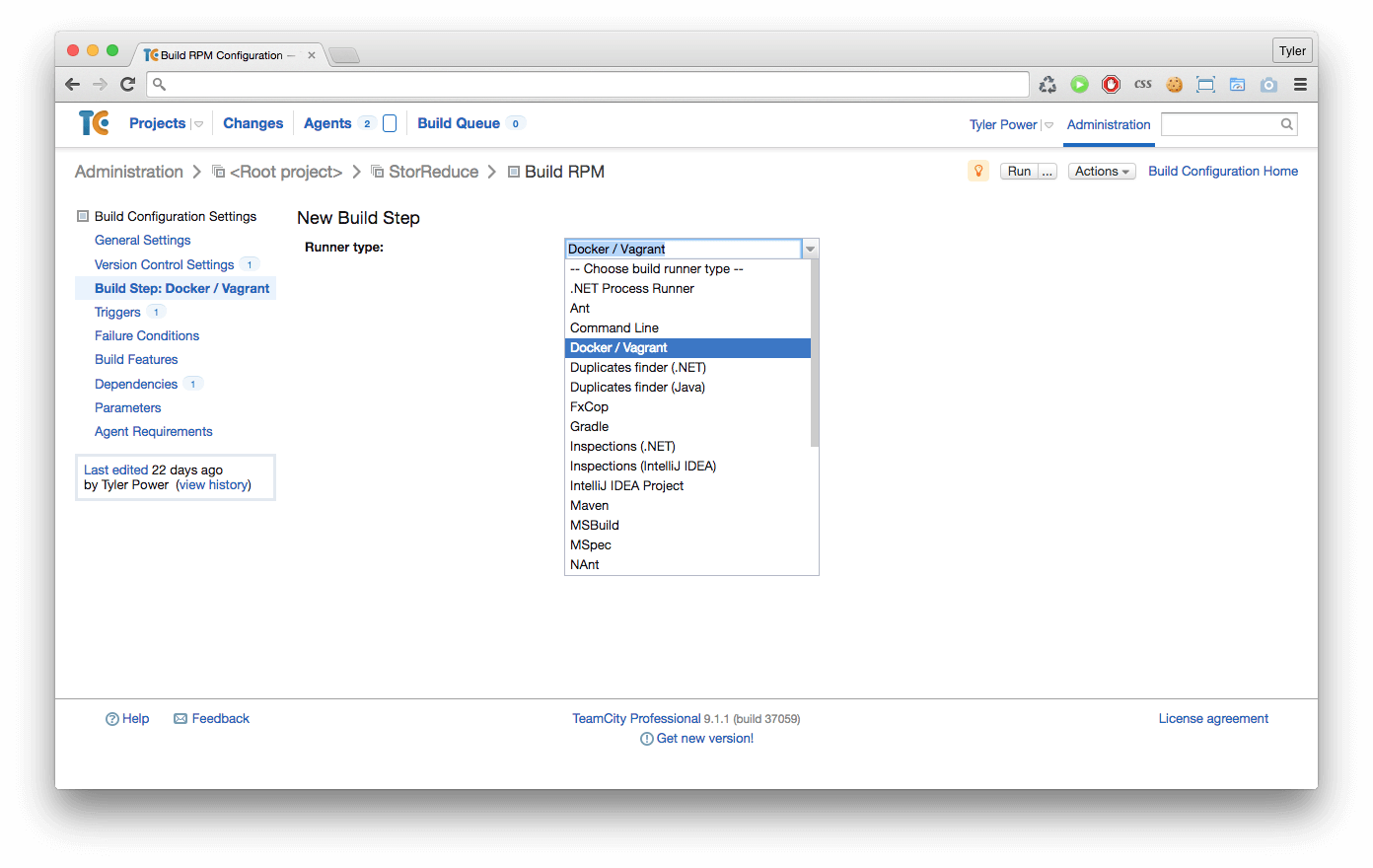

Once the TeamCity.Virtual plugin is installed TeamCity will start showing a new build step:

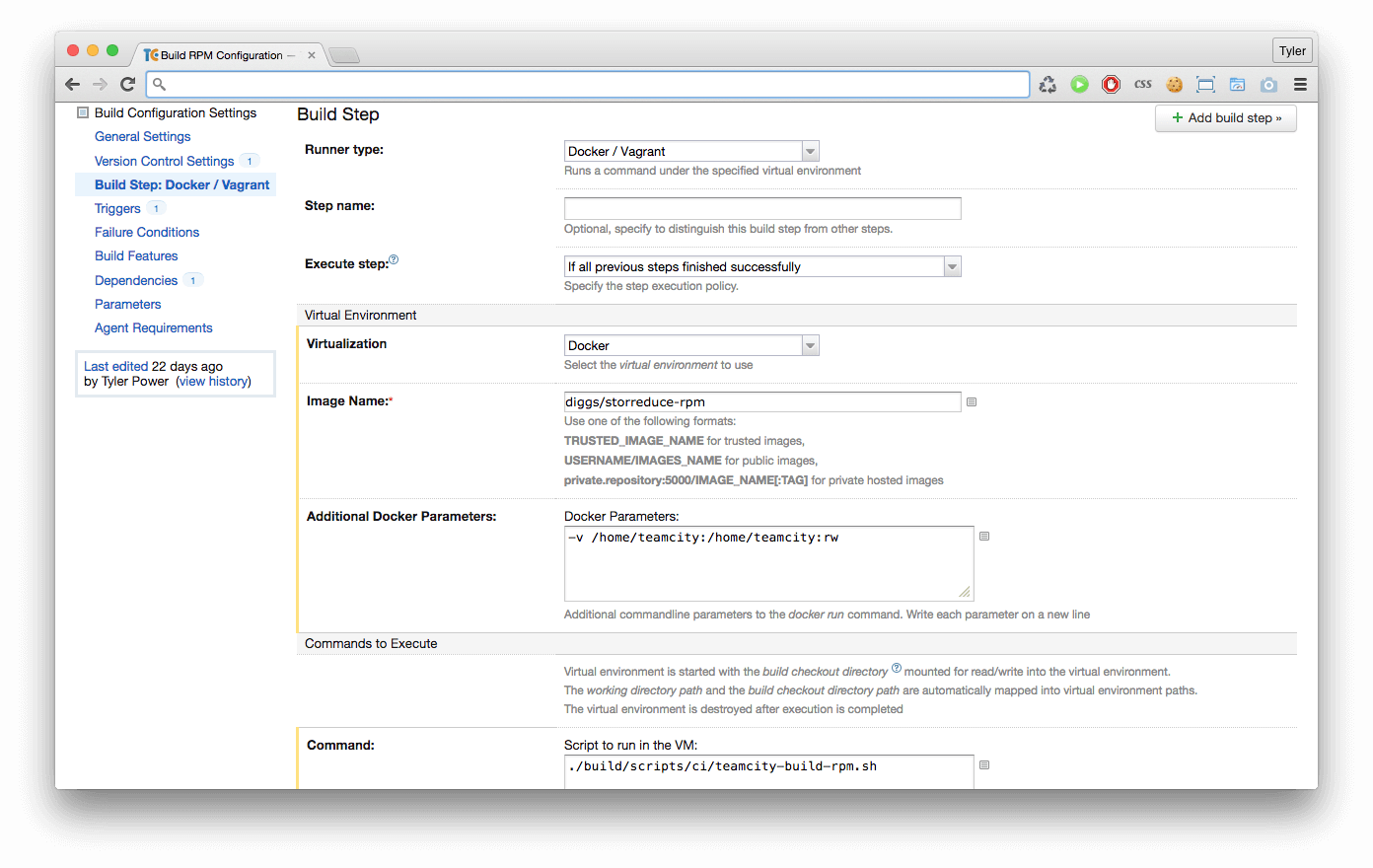

And after selecting it you can specify which Docker image to use, as well as the command to run:

For the build above, I mount the TeamCity home directory into the Docker container via the -v flag. Our build script needs to run git commands to determine the version of our software (our versioning is managed via git tags), and in TeamCity the git checkout lives in the home directory.
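
To make the mount concrete, here is a rough shell sketch of the kind of `docker run` invocation such a build step amounts to. It is not the plugin's actual implementation: the paths, the image name and the `run_in_container` helper are all hypothetical, and mounting the home directory at the same path inside the container is an assumption. By default the sketch only prints the command so it can be inspected first.

```shell
#!/bin/sh
# All of these values are hypothetical placeholders; adjust them to your agent.
TEAMCITY_HOME="${TEAMCITY_HOME:-/home/teamcity}"
CHECKOUT_DIR="${CHECKOUT_DIR:-$TEAMCITY_HOME/buildAgent/work/example}"
IMAGE="${IMAGE:-example/rpm-build:latest}"
DRY_RUN="${DRY_RUN:-1}"   # 1 = only print the command, 0 = execute it

# Run a command inside the build image with the TeamCity home directory mounted.
run_in_container() {
    # Mount the home directory at the same path (an assumption) and start in
    # the checkout directory so git commands can see the repository.
    set -- docker run --rm \
        -v "$TEAMCITY_HOME:$TEAMCITY_HOME" \
        -w "$CHECKOUT_DIR" \
        "$IMAGE" "$@"
    if [ "$DRY_RUN" = "1" ]; then
        echo "$*"
    else
        "$@"
    fi
}

# Example: ask git, inside the container, to describe the checkout by its tags.
run_in_container git describe --tags
```

Setting `DRY_RUN=0` runs the command for real, which requires Docker on the machine and an image that contains git.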

The final link in the chain is to ensure you have a Docker image suited to the build you’re configuring. The build pictured is our RPM build, which compiles our binaries and produces an RPM. To do this we need to ensure rpmbuild is installed, as well as golang and supporting tools. We then push our built Docker image to the Docker Hub so it is available during the build. One of the nice things about the TeamCity.Virtual plugin is that it does a docker pull at the beginning of the build, so it will always pull down the latest version of your Docker images.
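
Building and publishing such an image could look roughly like the sketch below. The repository name `example/rpm-build` and the `run` and `publish_image` helpers are hypothetical, and the sketch assumes the Dockerfile sits in the directory passed to `publish_image`. As a safety net it only prints the commands unless `DRY_RUN=0` is set.

```shell
#!/bin/sh
IMAGE="${IMAGE:-example/rpm-build:latest}"   # hypothetical repository name
DRY_RUN="${DRY_RUN:-1}"                      # 1 = print only, 0 = execute

# Execute a command, or only print it when DRY_RUN=1.
run() {
    if [ "$DRY_RUN" = "1" ]; then
        echo "$*"
    else
        "$@"
    fi
}

# Build the image from the Dockerfile in directory $1, then push it.
publish_image() {
    run docker build -t "$IMAGE" "$1" &&
    run docker push "$IMAGE"
}

publish_image .
```

Pushing for real needs a prior `docker login` with an account that can write to the repository.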

Here is the Dockerfile used for our RPM build. Everything in here is something we no longer have to set up on each build server thanks to Docker:

    # This docker image is used on CI for building RPMs
    # awscli is installed for uploading to S3 after the build, as well as godep
    # and rpm for the rpm build.
    FROM centos:6
    MAINTAINER StorReduce

    # install EPEL repos
    RUN yum -y install wget && \
        wget http://dl.fedoraproject.org/pub/epel/6/x86_64/epel-release-6-8.noarch.rpm && \
        yum -y install epel-release-6-8.noarch.rpm && \
        rm epel-release-6-8.noarch.rpm

    # install dev packages
    RUN yum -y groupinstall 'Development Tools'

    # install our dependencies
    RUN yum -y install which && \
        yum -y install tar && \
        yum -y install hg && \
        yum -y install git && \
        yum -y install python-pip && \
        yum -y clean all && \
        pip install awscli && \
        mkdir /root/.aws

    # install go
    RUN wget https://storage.googleapis.com/golang/go1.4.2.linux-amd64.tar.gz && \
        tar -C /usr/local -xzf go1.4.2.linux-amd64.tar.gz && \
        rm go1.4.2.linux-amd64.tar.gz

    # configure go
    RUN mkdir -p /go/src /go/bin && chmod -R 777 /go
    ENV GOPATH /go
    ENV PATH /usr/local/go/bin:/go/bin:$PATH
    WORKDIR /go

    # install godep
    RUN go get github.com/tools/godep
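
A quick way to catch a broken image before it reaches the build servers is a small smoke test that checks the expected tools are on the PATH. The sketch below is one possible approach, not something from our actual pipeline: the `missing_tools` and `check_tools` helpers are hypothetical, and the tool list in the usage note is my reading of what the Dockerfile above installs.

```shell
#!/bin/sh
# Print the name of every given tool that is not found on PATH.
missing_tools() {
    for tool in "$@"; do
        command -v "$tool" >/dev/null 2>&1 || echo "$tool"
    done
}

# Succeed only if every given tool is present; otherwise report and fail.
check_tools() {
    missing=$(missing_tools "$@")
    if [ -n "$missing" ]; then
        echo "missing tools:" $missing >&2
        return 1
    fi
    return 0
}
```

Saved as a script and copied into the image, it could be called at the end of an image build with something like `check_tools go godep rpmbuild aws git`, so that a missing dependency fails the image build rather than a later CI run.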

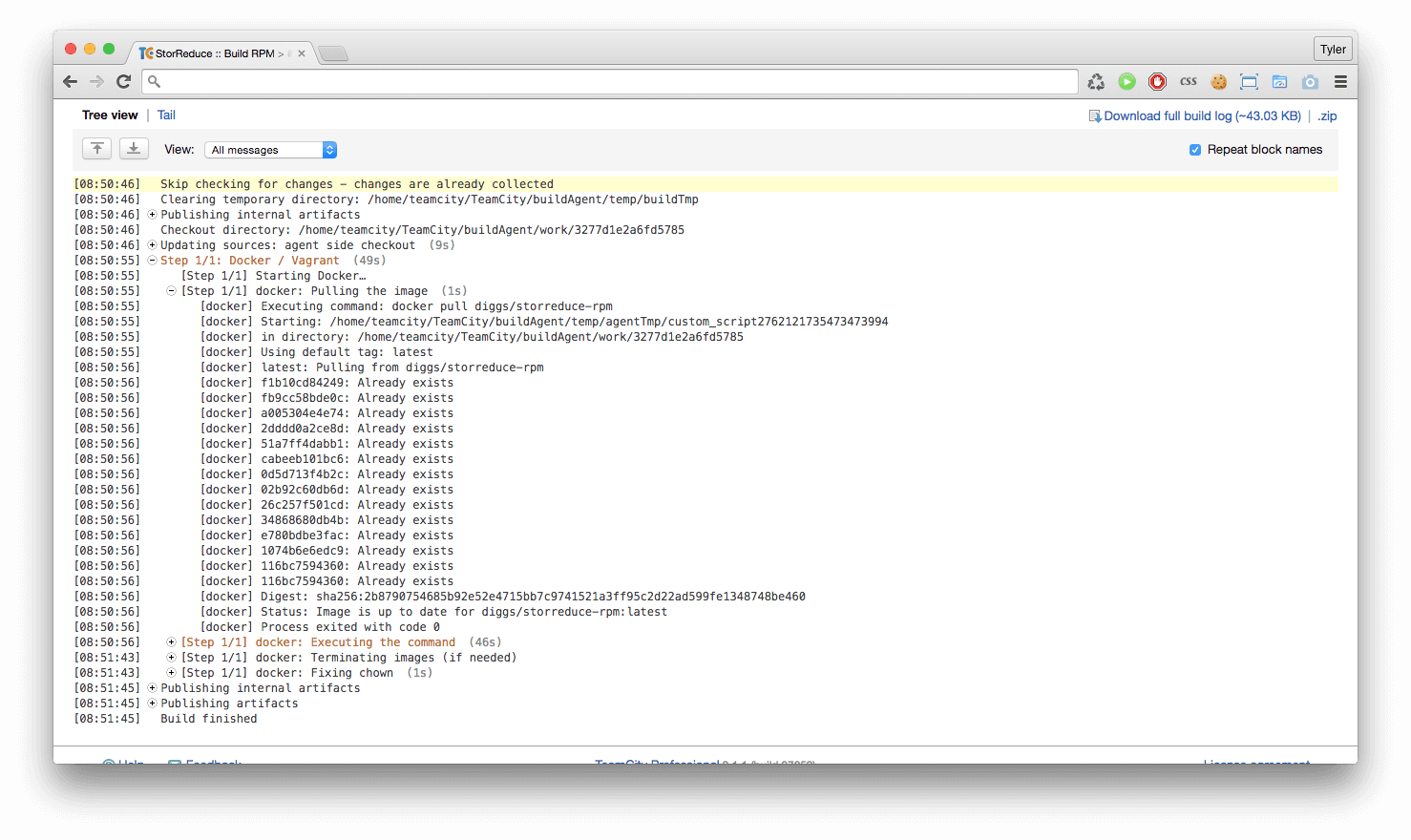

Finally, when the build runs you get the same great logging output from TeamCity. Here you can see the build executing inside the Docker container: